The human face of interoperability

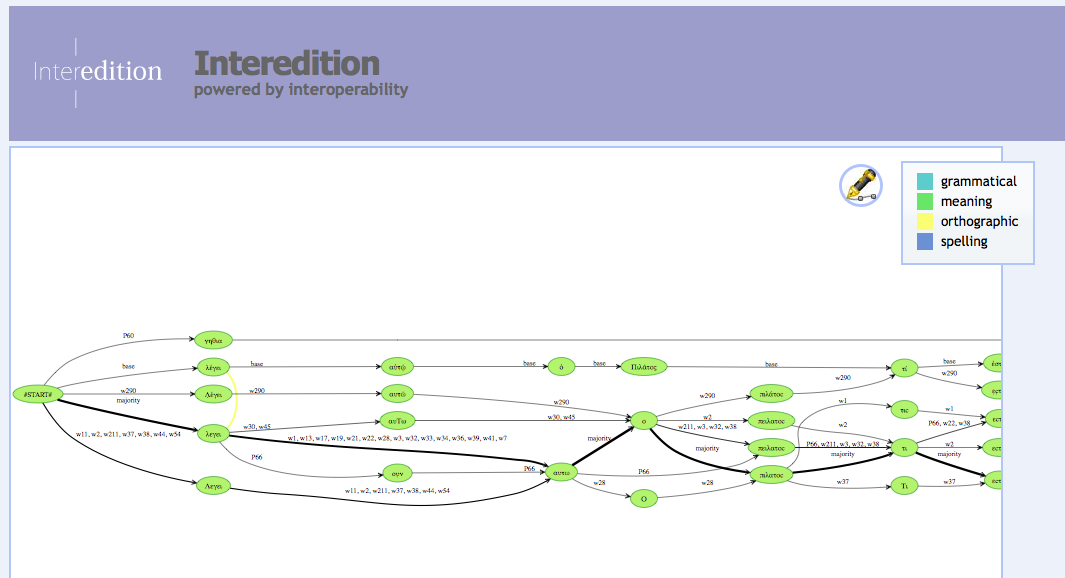

On 19 and 20 March 2012 an Interedition symposium was held at the Huygens Institute for the History of the Netherlands. Alongside excellent papers by, for instance, Gregor Middell (http://bit.ly/KTBJp9) and Stan Ruecker (http://bit.ly/KTBVVm), a number of development projects were presented that showed very well what Interedition is all about: promoting and advancing the interoperability of tools used in Digital Textual Scholarship. A nice example was given by Tara Andrews (http://bit.ly/LjhKi6) and Troy Griffiths (http://bit.ly/NW1WCC). They showed how the same software component was used in separate local projects to build tool sets for stemmatological analysis, transcription, and regularization of variant texts. The tool that they developed, in collaboration with me, enables textual scholars to correct and adapt the results of a text collation through an interactive graph visualization (cf. Fig. 1). I think their example showed well how collaboration can lead to efficient tool development and the reuse of digital solutions.

At the same time, the collaborative work on this nifty graph-based regularization tool may be a good example of why we need to interrogate some of the basic assumptions we tend to have about interoperability. Before getting to that, and because Interedition is at its core about interoperability, let me first explain what I understand interoperability to be. That is not as trivial a task as one might expect. I think it was back in 2008 that I gave a ‘grab bag’ lecture on Interedition. This is the type of lecture where you list a large number of topics together with the number of minutes you could talk about each, and the audience then picks its favorite selection. A little disappointingly, nobody at the time was interested in interoperability. I tend to think that was because no one in the audience knew what the term even meant.

Interoperability (http://en.wikipedia.org/wiki/Interoperability) can be defined along various lines, but simply put it is the ability of computer programs to share their information and to use each other’s functionality. A familiar example is mail access. These days it doesn’t matter whether I check my mail using GMail, Outlook, Thunderbird, the built-in mail client of my smartphone, or the iPad. All those programs and devices present the same mail data from the same mail server, and all of them can send mail through that server. The advantage is that everybody can work with the program he or she is most comfortable with, while the mail still keeps arriving. In short, interoperability ensures that we can use data any time and any place, so people can conveniently collaborate.

That it is about using the same data any time and any place probably inspired the common belief that interoperability is a matter of data format standards: pick one and all technology will work nicely in harmony. That, of course, is only very partially true. The format of data does indeed say something about its potential for exchange and multi-channel use. But mostly format says something about applicability. “Nice that you marked up every stanza in that text file, but I really needed all the pronouns…” Standardized formats unfortunately don’t necessarily make data useful for other applications. For that, it turns out to be more important that computer (or digital) processes, rather than data, are exchangeable. Within Interedition we were therefore interested in the ability to exchange functionality. Could we make the processes we run on our data reusable by others in ways that are more generalizable? Apart from all the research possibilities that would arise from this, there was also a very pragmatic rationale for reusing digital processes: with so few people in the field of Digital Humanities who are actually able to create new digital functionality, it would be a pity to let them reinvent the same wheels independently.

In practice, to reach interoperable solutions it is paramount to establish how digital functionality in humanities research can be combined so that the same code is reused across several processes: “Where in your automated text collation workflow can my spelling normalizer tool be utilized?” Most essentially, that is a question about our ability to collaborate. Reuse and interoperability are driven by collaboration between people. Interoperability in that sense becomes more a matter of collaboratively formalizing and building discrete components of digital workflows than of selecting a few standards. Standards are abundantly available anyway (http://www.dlib.indiana.edu/~jenlrile/metadatamap/). Connectable and reusable workflow components, not so much.
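To make the normalizer question a little more concrete, here is a minimal sketch in Python. It is not the Interedition code or any existing tool's API: the shared "tokens in, tokens out" interface, all function names, and the sample witnesses are assumptions made up for illustration, and the "collation" is a naive position-by-position comparison rather than real alignment. The point is only that once two people agree on a small interface, one person's normalizer can slot into another person's workflow.

```python
from typing import Callable, Dict, List

Tokens = List[str]
# Hypothetical shared interface: every workflow step maps tokens to tokens.
Step = Callable[[Tokens], Tokens]


def tokenize(text: str) -> Tokens:
    """Split a witness text into whitespace-separated tokens."""
    return text.split()


def lowercase(tokens: Tokens) -> Tokens:
    """A trivial built-in step of the (hypothetical) collation workflow."""
    return [token.lower() for token in tokens]


def make_spelling_normalizer(variants: Dict[str, str]) -> Step:
    """Someone else's component: maps known spelling variants to one form."""
    def normalize(tokens: Tokens) -> Tokens:
        return [variants.get(token, token) for token in tokens]
    return normalize


def collate(witnesses: Dict[str, str], steps: List[Step]) -> List[Dict[str, str]]:
    """Naive collation: run every step on each witness, then compare by position.

    Real collation software aligns tokens; this stand-in only lines them up.
    """
    prepared = {}
    for sigil, text in witnesses.items():
        tokens = tokenize(text)
        for step in steps:
            tokens = step(tokens)
        prepared[sigil] = tokens
    length = max(len(tokens) for tokens in prepared.values())
    return [
        {sigil: (tokens[i] if i < len(tokens) else "") for sigil, tokens in prepared.items()}
        for i in range(length)
    ]


def variant_positions(table: List[Dict[str, str]]) -> List[int]:
    """Positions at which the witnesses disagree."""
    return [i for i, row in enumerate(table) if len(set(row.values())) > 1]


# Invented sample witnesses, not taken from any edition.
witnesses = {"A": "The olde kyng rode out", "B": "the old king rode forth"}
normalizer = make_spelling_normalizer({"olde": "old", "kyng": "king"})

plain = variant_positions(collate(witnesses, [lowercase]))
normalized = variant_positions(collate(witnesses, [lowercase, normalizer]))
print(plain, normalized)
```

With the normalizer plugged in, the purely orthographic differences drop out and only the substantive variant remains. Agreeing on where such a component goes, and on what it receives and returns, is exactly the kind of conversation between people that the paragraph above describes.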

I therefore think that the most important lesson learned from Interedition so far is that interoperability is not essentially about tools and data, or about tools over data. It is about humans and interaction over tools and data. Interedition succeeded in bringing together a unique group of developers and researchers who were prepared, and daring enough, to go as far as it would take to understand each other’s languages, aims, and methods. That attitude generated new, useful, and innovative collaborations. The successful development of shared and interoperable software was almost a mere side effect of that intent to understand and collaborate. Stimulating and supporting a creative community full of initiative turns out to be pivotal, far more so than maintaining any technical focus. Thus interoperability is foremost about the interoperability of people. The fact that it is the white ravens (those rare people who combine research and coding skills in one person) who push the boundaries of Digital Humanities time and time again is the ultimate proof of that.

(This is a translation of a blog post that appeared originally in Dutch on http://www.huygens.knaw.nl/de-menselijke-kant-van-interoperabiliteit/#more-4377.)